I've been thinking about this a little more. To be honest, I don't like how it's done right now, as it is really unintuitive.

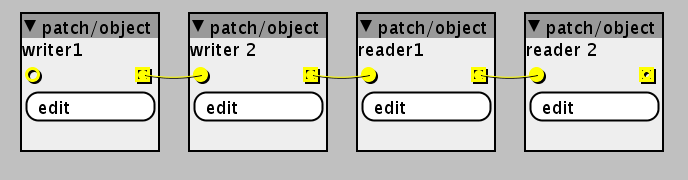

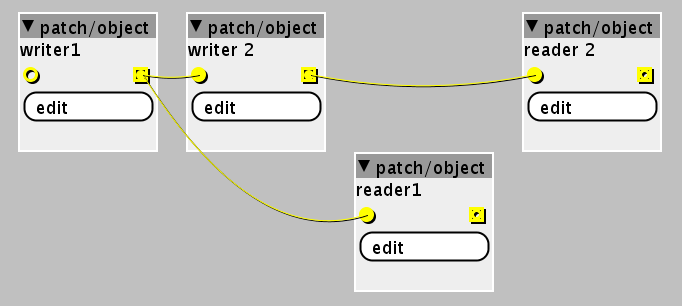

- Depending on the patch, you can get all kinds of weird phasing issues (including the one with my reverb that started this thread). The biggest problem to me is that shifting objects around without changing any of the cables can drastically alter the sound (which you just wouldn't expect to happen!). You might find your patch doesn't work anymore after "cleaning" it up.
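To make the effect concrete, here is a minimal Python sketch of position-based ordering. It assumes objects execute top-to-bottom, with ties broken left-to-right (row-major by y, then x). The patcher's actual tie-breaking rule may differ, and the patch model and the `execution_order`/`delayed_cables` helpers are hypothetical. Dragging one object below another, without touching a single cable, turns an undelayed cable into a delayed one:

```python
from typing import Dict, List, Tuple

# Hypothetical patch model: object name -> (x, y) position on the canvas
Positions = Dict[str, Tuple[int, int]]
# Cables as (source object, destination object) pairs
Cables = List[Tuple[str, str]]


def execution_order(positions: Positions) -> List[str]:
    # Assumed rule: top-to-bottom (y), ties broken left-to-right (x)
    return sorted(positions, key=lambda name: (positions[name][1], positions[name][0]))


def delayed_cables(positions: Positions, cables: Cables) -> Cables:
    # A cable picks up a one-block delay when its destination runs
    # before (or is the same object as) its source
    rank = {name: i for i, name in enumerate(execution_order(positions))}
    return [(src, dst) for src, dst in cables if rank[dst] <= rank[src]]


cables = [("osc", "filter"), ("filter", "out")]
tidy = {"osc": (0, 0), "filter": (0, 100), "out": (0, 200)}
moved = {"osc": (0, 0), "filter": (0, 300), "out": (0, 200)}  # filter dragged below out

print(delayed_cables(tidy, cables))   # []
print(delayed_cables(moved, cables))  # [('filter', 'out')]
```

Same cables, different layout, different sound: that is exactly what makes "cleaning up" a patch risky.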

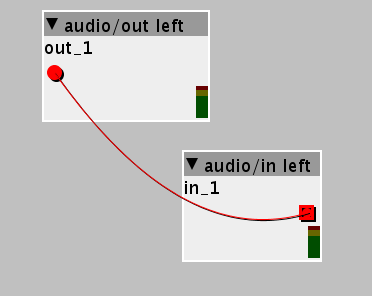

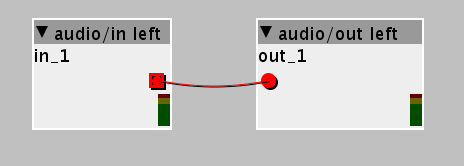

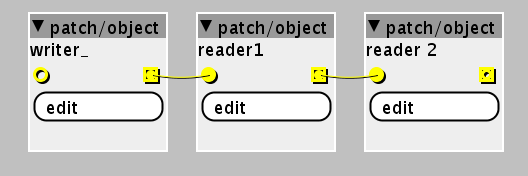

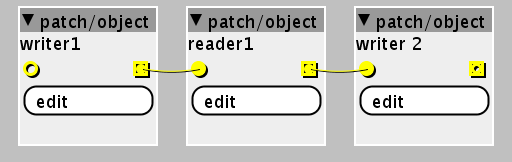

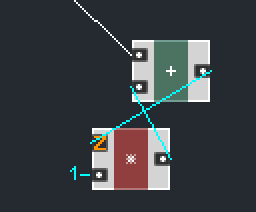

- Every time a patch cable goes up/left, it creates a 16-sample (1-block) delay (z^-16). This can add up to a significant overall delay, and there is currently no indication that it happens. Not only does each such cable add 0.33 ms of delay, it also eats up a significant amount of memory in larger patches (64 bytes per cable).
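Both per-cable figures follow from the block size. A quick check in Python, assuming a 48 kHz sample rate and 4-byte (32-bit) samples, which is what makes 16 samples come out to about 0.33 ms and 64 bytes (the helper names are just for illustration):

```python
SAMPLE_RATE_HZ = 48_000  # assumption: 48 kHz audio rate
BLOCK_SIZE = 16          # samples per block, i.e. the z^-16
BYTES_PER_SAMPLE = 4     # assumption: 32-bit samples


def delay_ms(delayed_cables_in_series: int) -> float:
    # Extra latency for a signal that passes through this many delayed cables
    return delayed_cables_in_series * BLOCK_SIZE / SAMPLE_RATE_HZ * 1000.0


def buffer_bytes(cables: int) -> int:
    # One block-sized buffer per cable
    return cables * BLOCK_SIZE * BYTES_PER_SAMPLE


print(round(delay_ms(1), 2))   # 0.33
print(buffer_bytes(1))         # 64
print(round(delay_ms(10), 2))  # 3.33
print(buffer_bytes(100))       # 6400
```

So a signal path that happens to cross ten "backwards" cables is already more than 3 ms late, without anything in the GUI telling you.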

One possible short-term solution could be to let users see and manually specify the processing order. The GUI could display a number indicating the order of execution in the top right corner of each object. With a right-click, the user could move the object up/down in the processing tree. All of this could be hidden by default and enabled on request (e.g. by adding a "show processing order" option to the title menu of the window that the user can tick to enable it).
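A rough sketch of the data model such a view could sit on, in Python. The `ProcessingOrder` class and its methods are hypothetical: the list index is the execution position, the GUI would draw `label()` in each object's corner, and the right-click menu would call `move_up`/`move_down`:

```python
from typing import List


class ProcessingOrder:
    """Hypothetical explicit execution order, stored with the patch."""

    def __init__(self, objects: List[str]) -> None:
        self.objects = list(objects)

    def label(self, name: str) -> int:
        # 1-based number shown in the object's top right corner
        return self.objects.index(name) + 1

    def move_up(self, name: str) -> None:
        # Execute this object one step earlier (no-op if already first)
        i = self.objects.index(name)
        if i > 0:
            self.objects[i - 1], self.objects[i] = self.objects[i], self.objects[i - 1]

    def move_down(self, name: str) -> None:
        # Execute this object one step later (no-op if already last)
        i = self.objects.index(name)
        if i < len(self.objects) - 1:
            self.objects[i + 1], self.objects[i] = self.objects[i], self.objects[i + 1]


order = ProcessingOrder(["osc", "out", "filter"])
order.move_up("filter")
print([order.label(n) for n in ("osc", "filter", "out")])  # [1, 2, 3]
```

The point of storing the order explicitly is that it is decoupled from screen position, so dragging objects around would no longer change the sound.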

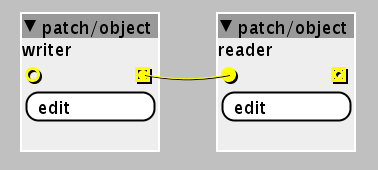

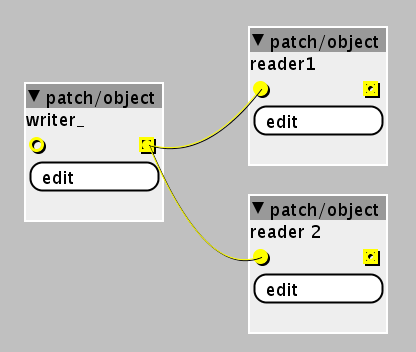

In the long term, I think it would be best to apply some graph theory here. What we really want is a topological sort of the graph. This, however, requires the graph to be a directed acyclic graph (DAG), which is almost certainly not the case here. IMO it would be best to identify the bottlenecks in the graph (portions of the directed graph with the fewest parallel edges) and insert the z^-16 delays there, but I'm not entirely sure which algorithm to choose for this. In Native Instruments Reaktor, unit delays are added whenever the user makes a connection that creates feedback. The delay is indicated by a small z symbol next to the input of the node where the feedback path ends.
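One simple (but not bottleneck-aware) way to get a DAG is to run a depth-first search, treat every back edge as a feedback edge that gets a z^-16, and use the reverse DFS finish order as the execution order. A minimal Python sketch, with a hypothetical `schedule` function and a made-up reverb-like example. Note that this does not answer the "where are the bottlenecks" question: which edges get cut depends on the visiting order, and finding the smallest set of edges to cut is the minimum feedback arc set problem, which is NP-hard, so a real patcher would likely need some heuristic on top.

```python
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]


def schedule(nodes: List[str], edges: List[Edge]) -> Tuple[List[str], Set[Edge]]:
    """Return (execution order, feedback edges that need a z^-16 delay).

    Back edges found by a depth-first search are treated as feedback edges.
    Removing them leaves a DAG, and the reversed DFS finish order is a
    topological order of that DAG.
    """
    succ: Dict[str, List[str]] = {n: [] for n in nodes}
    for src, dst in edges:
        succ[src].append(dst)

    WHITE, GREY, BLACK = 0, 1, 2
    color = {n: WHITE for n in nodes}
    finished: List[str] = []
    feedback: Set[Edge] = set()

    def visit(u: str) -> None:
        color[u] = GREY  # u is on the current DFS path
        for v in succ[u]:
            if color[v] == GREY:
                feedback.add((u, v))  # edge closes a cycle: delay it
            elif color[v] == WHITE:
                visit(v)
        color[u] = BLACK
        finished.append(u)

    for n in nodes:
        if color[n] == WHITE:
            visit(n)

    return finished[::-1], feedback


nodes = ["in", "mix", "delay", "out"]
edges = [("in", "mix"), ("mix", "delay"), ("delay", "mix"), ("delay", "out")]
order, feedback = schedule(nodes, edges)
print(order)     # ['in', 'mix', 'delay', 'out']
print(feedback)  # {('delay', 'mix')}
```

The recursion is fine for patch-sized graphs; a very large graph would want an iterative DFS.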

This could be translated to the Axoloti patcher as well, but it would require somehow converting existing patches, which leaves us with the same problem of not knowing where to add the delays.
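For new connections, the Reaktor-style rule boils down to one reachability check: a cable src -> dst creates feedback exactly when dst can already reach src through undelayed cables. A minimal Python sketch; `creates_feedback` and `connect` are hypothetical, and cables are simplified to (object, object) pairs without inlet/outlet names:

```python
from collections import deque
from typing import Dict, List, Set, Tuple

Edge = Tuple[str, str]


def creates_feedback(edges: List[Edge], delayed: Set[Edge], src: str, dst: str) -> bool:
    """True if a new src -> dst cable would close a loop of undelayed cables."""
    if src == dst:
        return True
    succ: Dict[str, List[str]] = {}
    for a, b in edges:
        if (a, b) not in delayed:  # an already-delayed cable breaks its loop
            succ.setdefault(a, []).append(b)
    # Breadth-first search from dst: can the signal already get back to src?
    seen = {dst}
    queue = deque([dst])
    while queue:
        u = queue.popleft()
        for v in succ.get(u, []):
            if v == src:
                return True
            if v not in seen:
                seen.add(v)
                queue.append(v)
    return False


def connect(edges: List[Edge], delayed: Set[Edge], src: str, dst: str) -> None:
    """Add a cable; mark it as a unit delay if it creates feedback."""
    if creates_feedback(edges, delayed, src, dst):
        delayed.add((src, dst))  # the GUI would draw a small "z" at dst's input
    edges.append((src, dst))


edges: List[Edge] = []
delayed: Set[Edge] = set()
for src, dst in [("in", "mix"), ("mix", "delay"), ("delay", "mix"), ("delay", "out")]:
    connect(edges, delayed, src, dst)
print(delayed)  # {('delay', 'mix')}
```

The catch is that the result depends on the order in which the cables were drawn, and an existing patch file has no such history, which is the conversion problem described above.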

The JUCE class juce::AudioProcessorGraph does the same thing. It builds an ordered graph and inserts unit delays on the feedback edges. It also does latency compensation, which would be super neat as well. It defines rendering operations (clear buffer, copy buffer, delay buffer, execute node, etc.), then iterates over the nodes in the graph and builds a list of rendering operations that define which node to execute when, which buffers can be reused, and where to insert delays, either to compensate for the processing latency of the nodes or to allow feedback. The processing order is updated in this function; the details of the sorting are in this function.
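To illustrate just the latency-compensation part of that idea (this is not JUCE's implementation, which also handles buffer reuse and channels), here is a small Python sketch. `build_render_ops` and the `("execute", node)` / `("delay", src, dst, samples)` op tuples are hypothetical. It walks nodes in topological order (feedback edges already removed), tracks how late each node's output is, and pads the faster inputs of a node so everything arriving there lines up:

```python
from typing import Dict, List, Tuple

Edge = Tuple[str, str]


def build_render_ops(order: List[str], latency: Dict[str, int], edges: List[Edge]) -> List[Tuple]:
    """Sketch of a render-op list with latency compensation.

    `order` must be a topological order of `edges` (feedback edges removed).
    `latency` is each node's own processing latency in samples.
    """
    inputs: Dict[str, List[str]] = {n: [] for n in order}
    for src, dst in edges:
        inputs[dst].append(src)

    out_latency: Dict[str, int] = {}
    ops: List[Tuple] = []
    for node in order:
        arrivals = {src: out_latency[src] for src in inputs[node]}
        aligned = max(arrivals.values(), default=0)
        for src, lat in arrivals.items():
            if lat < aligned:
                # Pad the faster path so all inputs line up in time
                ops.append(("delay", src, node, aligned - lat))
        ops.append(("execute", node))
        out_latency[node] = aligned + latency[node]
    return ops


order = ["in", "dry", "fx", "mix"]
latency = {"in": 0, "dry": 0, "fx": 64, "mix": 0}
edges = [("in", "dry"), ("in", "fx"), ("dry", "mix"), ("fx", "mix")]
for op in build_render_ops(order, latency, edges):
    print(op)
```

Here the dry path gets a 64-sample compensation delay before the mix, so the dry and processed signals stay in phase.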

I know, this is a lot to ask, and those are some pretty substantial changes, but the overall positive effect could be very significant.

... so we can't replace this with a 'half-baked' solution, or with one that means users have to rewrite existing patches. It has to be done properly.

) ... so there we can replace this with a 'half backed' solution, or one that means users have to rewrite existing patches. it has to be done properly.