I'm not trying to criticize or be negative, and it's great that you're doing this, but I got a bit confused when I read "school for the deaf" together with a "snare pad". I'm curious: if it's a school for the deaf, isn't an audio-based instrument a bit of an odd choice? Is it so they can make music for others who *can* hear it? (Or perhaps some students are only partly deaf and can still hear parts of it.) Or does it also control lights, so there's a visual performance for the people who can't hear it? Or do you even have something that lets them actually *feel* the music through touch?

Or perhaps I'm reading this completely wrong, and you're building this WITH the school for a residence where hearing people also live?

If you're making this for use by deaf people, I'm very curious to know what you are using to enable them to "hear" the music.

To come to your question:

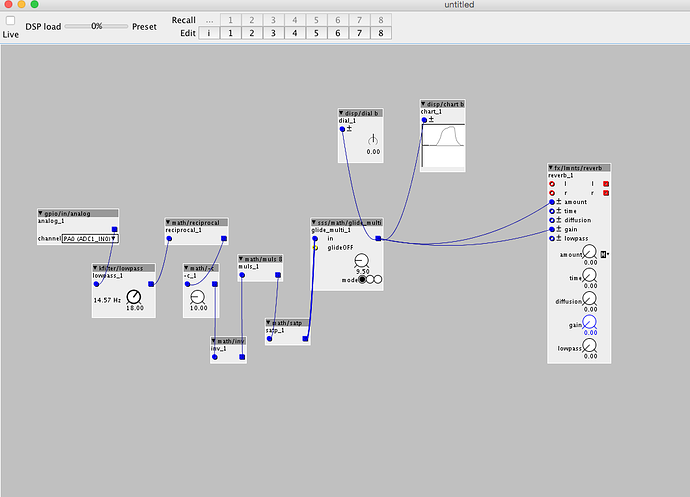

-you write that you've used a digital input (a one-bit boolean input that is either 0 or 1, i.e. off or on). Have you tried using an analogue input module instead? As far as I remember, the Sharp sensor I used about 10 years ago had an output that maxed out at around 3.3V (please check this before connecting it to the Axoloti, because if it goes over this it might fry the board!). As it's an analogue distance sensor, it returns a voltage between a minimum of about 0.7V and a maximum of about 3.3V (that's from memory, so I may be wrong about this!), so you should be able to get continuous control out of it. To scale this to the "normal" fractional 0-64 range (like the regular knobs):

-read out the maximum and minimum output of the sensor, as it is received inside the patcher, using a display module.

-subtract the minimum value from the maximum value to get the control range (width) of the sensor.

-use a "reciprocal" module and send this range value to its input (this will calculate 64/range).

-then subtract the minimum value from the incoming sensor signal to set its lowest point back to zero.

-then multiply this result by the output of the reciprocal module.

-the output of the multiplier will now be (roughly) 0 to 64. To make sure it won't go below 0 or above 64, you can use a unipolar saturate module ("satp" in the math tab).

This value can now be used to control all kinds of things with easy scaling of the range.
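To make the arithmetic of the steps above easier to follow, here is a plain-Python model of the same chain. It is only a sketch: it uses floats in the 0..64 "knob units" the display modules show rather than the board's own fixed-point numbers, and `SENSOR_MIN`, `SENSOR_MAX`, `satp` and `scale_sensor` are hypothetical names with made-up readings, to be replaced by whatever your display module actually shows.

```python
# Plain-Python model of the scaling chain, in 0..64 "knob units".
# SENSOR_MIN and SENSOR_MAX are hypothetical readings: replace them with
# the values your display module shows at the sensor's two extremes.

SENSOR_MIN = 12.0  # hypothetical lowest reading seen on the display
SENSOR_MAX = 60.0  # hypothetical highest reading seen on the display


def satp(x: float, limit: float = 64.0) -> float:
    """Unipolar saturate: clamp x into 0..limit."""
    return max(0.0, min(limit, x))


def scale_sensor(raw: float, lo: float = SENSOR_MIN, hi: float = SENSOR_MAX) -> float:
    """Map a raw sensor reading from lo..hi onto 0..64."""
    span = hi - lo                 # control range: maximum minus minimum
    factor = 64.0 / span           # what the reciprocal step provides: 64/range
    shifted = raw - lo             # move the lowest point back to zero
    return satp(shifted * factor)  # multiply, then clamp to 0..64


if __name__ == "__main__":
    for reading in (5.0, 12.0, 36.0, 60.0, 63.0):
        print(f"{reading:5.1f} -> {scale_sensor(reading):5.1f}")
```

With these example readings, a value halfway between the minimum and maximum (36.0) lands at 32, and anything outside the measured range is clamped to 0 or 64 by the saturate step.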

If the value still "wobbles" or is noisy, use a lowpass filter module from the kfilter tab (control-rate filters) to smooth out the signal. You can also use my glide module (sss/math), which gives you the choice of linear rate (the signal changes at a fixed rate, so small distances take little time and bigger distances take longer) or linear time (each change, no matter how big, always takes the same amount of time, though this might not work well if the input keeps changing all the time).
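The difference between these smoothing options can be sketched with three tiny control-rate smoothers. These are hypothetical illustrations of the general ideas (a one-pole lowpass, a linear-rate glide and a linear-time glide), not the actual code of the kfilter lowpass or of the sss/math glide module; the class names and parameters (`a`, `rate`, `ticks`) are made up for the example.

```python
# Hypothetical control-rate smoothers illustrating the ideas only;
# this is not the code of the kfilter lowpass or the sss/math glide.

class OnePoleLowpass:
    """y += a * (x - y): a smaller 'a' gives heavier smoothing."""
    def __init__(self, a: float, start: float = 0.0):
        self.a = a
        self.y = start

    def step(self, x: float) -> float:
        self.y += self.a * (x - self.y)
        return self.y


class LinearRateGlide:
    """Moves by at most 'rate' per tick, so bigger jumps take longer."""
    def __init__(self, rate: float, start: float = 0.0):
        self.rate = rate
        self.y = start

    def step(self, target: float) -> float:
        diff = target - self.y
        if abs(diff) <= self.rate:
            self.y = target
        else:
            self.y += self.rate if diff > 0 else -self.rate
        return self.y


class LinearTimeGlide:
    """Reaches every new target in 'ticks' steps, however big the jump."""
    def __init__(self, ticks: int, start: float = 0.0):
        self.ticks = ticks
        self.y = start
        self.target = start
        self.inc = 0.0
        self.left = 0

    def step(self, target: float) -> float:
        if target != self.target:  # new target: restart the ramp from here
            self.target = target
            self.inc = (target - self.y) / self.ticks
            self.left = self.ticks
        if self.left > 0:
            self.y += self.inc
            self.left -= 1
            if self.left == 0:
                self.y = self.target  # snap to avoid float drift
        return self.y


def ticks_to_reach(glide, target: float, limit: int = 1000):
    """Count the ticks a glide needs to arrive exactly at the target."""
    for n in range(1, limit + 1):
        if glide.step(target) == target:
            return n
    return None


if __name__ == "__main__":
    for jump in (8.0, 32.0):
        print(jump,
              ticks_to_reach(LinearRateGlide(rate=2.0), jump),
              ticks_to_reach(LinearTimeGlide(ticks=4), jump))
```

In this sketch the linear-rate glide needs 4 ticks for a jump of 8 but 16 ticks for a jump of 32, while the linear-time glide needs 4 ticks for both. Because `LinearTimeGlide` restarts its ramp whenever the target changes, an input that never settles keeps restarting it, which matches the caveat about constantly changing inputs.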

To use this as a mix control for a reverb, you don't necessarily have to use the mix control on the reverb itself: you can also put a crossfade after the reverb and mix its output with the original input. The crossfaders are at the bottom of the mixer tab and have a pin input that does the crossfade for you; connect the scaled sensor signal to that pin.
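A post-reverb dry/wet crossfade driven by the scaled sensor value boils down to a weighted sum of the two signals. The sketch below assumes a simple linear crossfade (the mixer-tab crossfader may well use a different curve, such as equal-power), and `crossfade` and `crossfade_block` are hypothetical helper names, not Axoloti objects.

```python
# Linear dry/wet crossfade driven by the scaled 0..64 sensor value.
# The linear curve is an assumption; the actual mixer crossfader may differ.

def crossfade(dry: float, wet: float, control: float) -> float:
    """control 0 gives only dry, 64 gives only wet, 32 an even mix."""
    x = max(0.0, min(64.0, control)) / 64.0  # clamp, then normalise to 0..1
    return dry * (1.0 - x) + wet * x


def crossfade_block(dry: list[float], wet: list[float], control: float) -> list[float]:
    """Apply one control value to a whole block of audio samples."""
    return [crossfade(d, w, control) for d, w in zip(dry, wet)]


if __name__ == "__main__":
    dry = [1.0, 0.5, -0.5]   # original input samples
    wet = [0.0, 0.25, 0.5]   # reverb output samples
    for control in (0.0, 32.0, 64.0):
        print(control, crossfade_block(dry, wet, control))
```

Doing the mix after the reverb like this means the reverb itself always runs at full wet, and only the balance between its output and the untouched input follows the sensor.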