Going by both the literature and anecdotal evidence, there shouldn't be any performance advantage to running instructions from SRAM. People actually report that executing from SRAM can be slower because of caching and how the CPU pipeline and buses are structured (one example: https://community.st.com/s/question/0D50X00009Xkh2VSAR/the-cpu-performance-difference-between-running-in-the-flash-and-running-in-the-ram-for-stm32f407).

The fundamental issue is that we're taking the special case, i.e. dynamically editing an object implementation, and applying it universally. It's over-engineered; there's no technical reason for it. Yes, it might be tolerable to deal with a compile cycle because your host machine is fast. That doesn't make it correct or desirable. Compilation should only occur when someone is changing an object implementation. This approach also has the effect of making everything harder to debug. If all of that object implementation code is compiled ahead of time, it's much easier for a debugger to be aware of all of those symbols.

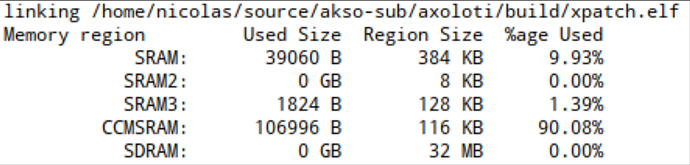

The actual object implementation can remain largely the same whether it lives in Flash or SRAM. You would simply call into object implementations in Flash as needed. I would argue that working from Flash would be easier overall because there would be less concern about the exact positions of things in memory. The patcher right now actually has awareness of explicit memory addresses on the target device, again, for no real technical benefit. It's a very brittle design.
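
To make that concrete, here's a rough sketch of what I mean by calling into precompiled implementations. Everything in it is made up for illustration (`obj_state_t`, `obj_table`, `patch_node_t`, `patch_run`, and the three toy objects); it is not the current firmware's API. The idea is only that the object code sits in a `const` table, which a typical MCU toolchain places in Flash, while the patch itself is just data in SRAM that indexes into that table.

```c
#include <stddef.h>
#include <stdint.h>

/* Hypothetical per-instance state; a real object would carry more. */
typedef struct {
    float param;  /* one user-adjustable parameter */
    float state;  /* running state, e.g. filter memory */
} obj_state_t;

/* Signature shared by every precompiled object implementation. */
typedef float (*obj_process_fn)(obj_state_t *s, float in);

/* Toy object implementations, compiled ahead of time. */
static float obj_gain(obj_state_t *s, float in)   { return in * s->param; }
static float obj_offset(obj_state_t *s, float in) { return in + s->param; }
static float obj_lowpass(obj_state_t *s, float in)
{
    /* One-pole smoother: move state toward the input by a fraction. */
    s->state += s->param * (in - s->state);
    return s->state;
}

enum { OBJ_GAIN, OBJ_OFFSET, OBJ_LOWPASS, OBJ_COUNT };

/* const table: on a typical embedded toolchain this ends up in Flash. */
static const obj_process_fn obj_table[OBJ_COUNT] = {
    [OBJ_GAIN]    = obj_gain,
    [OBJ_OFFSET]  = obj_offset,
    [OBJ_LOWPASS] = obj_lowpass,
};

/* A patch is plain data: which object to run, plus its instance state. */
typedef struct {
    uint8_t     type;   /* index into obj_table */
    obj_state_t state;
} patch_node_t;

/* Run one sample through a serial chain of nodes. */
float patch_run(patch_node_t *nodes, size_t count, float in)
{
    float signal = in;
    for (size_t i = 0; i < count; i++) {
        if (nodes[i].type < OBJ_COUNT) {  /* skip unknown object types */
            signal = obj_table[nodes[i].type](&nodes[i].state, signal);
        }
    }
    return signal;
}
```

With something like this, swapping an object in a patch means changing `type` and `param` in a `patch_node_t`, which the host could do over the wire without compiling anything, and the patch data carries no absolute addresses. A real patch is a graph rather than a serial chain, so take the loop as a placeholder for whatever scheduling is actually needed.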

The patch load process could just as easily target Flash, actually, but then we'd get into wear-leveling concerns, etc.

The bottom line is that the vast majority of patching could easily be done completely live without any compile cycle at all. It has the added bonus of actually being simpler to work with and to debug.

Ha, sorry if this all comes across as hyper-critical and doom and gloom! I'm just trying to make this thing the best it can possibly be. It bothers me that people are dealing with what I see as self-imposed technical limitations.