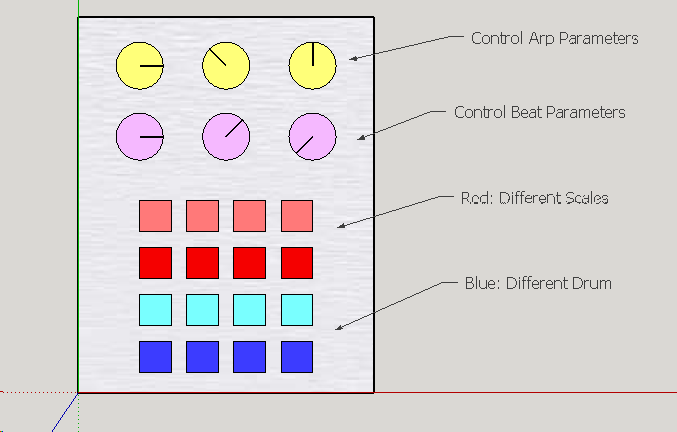

I've been making several modules lately that can take care of musical progressions.

For example, key progression is really easy if you follow a Tonnetz diagram, which could be controlled with an XY controller to draw the movement through the keys.
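To make the Tonnetz idea a bit more concrete, here is a rough Python sketch, not how my modules actually work. It assumes the common Tonnetz layout where one step along X is a perfect fifth (7 semitones) and one step along Y is a major third (4 semitones). The function names, the grid size and the 0..1 controller range are all made up for illustration.

```python
NOTE_NAMES = ["C", "C#", "D", "D#", "E", "F", "F#", "G", "G#", "A", "A#", "B"]

def tonnetz_pitch_class(x, y):
    """Pitch class (0 = C) at lattice position (x, y).

    Assumed layout: +1 on x is a perfect fifth, +1 on y is a major third.
    """
    return (7 * x + 4 * y) % 12

def xy_to_key(px, py, cols=12, rows=3):
    """Map a normalised XY-controller position (0..1 each) to a key name.

    cols/rows are hypothetical grid dimensions, not taken from any real module.
    """
    px = min(max(px, 0.0), 1.0)  # clamp controller values to 0..1
    py = min(max(py, 0.0), 1.0)
    x = min(int(px * cols), cols - 1)
    y = min(int(py * rows), rows - 1)
    return NOTE_NAMES[tonnetz_pitch_class(x, y)]

# Drawing a short path with the controller gives a key progression.
path = [(0.0, 0.0), (0.1, 0.0), (0.1, 0.4)]
progression = [xy_to_key(px, py) for px, py in path]
```

Because neighbouring cells are always a fifth or a third apart, any small movement of the controller lands on a closely related key, which is why drawing a path tends to sound musical.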

For drums you could use a sequencer that offers a list of variations across several genres for people to choose from. As for the drum sounds, I've made several drum modules that can be changed through randomisation, so people can just hit random until they get a sound they like. More or less the same goes for all the other sounds (I've got quite a few modules featuring a randomisation control).
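As a rough illustration of both ideas (a pattern bank to pick from, plus a "random" button for the sound), something like the Python sketch below could work. The genres, patterns, parameter names and ranges are all invented for the example and are not taken from my actual modules.

```python
import random

# Hypothetical pattern bank: genre -> list of 16-step variations ("x" = hit, "." = rest).
PATTERNS = {
    "house": [
        {"kick": "x...x...x...x...", "snare": "....x.......x...", "hat": "..x...x...x...x."},
        {"kick": "x...x...x...x..x", "snare": "....x.......x...", "hat": "x.x.x.x.x.x.x.x."},
    ],
    "hiphop": [
        {"kick": "x.....x...x.....", "snare": "....x.......x...", "hat": "x.x.x.x.x.x.x.x."},
    ],
}

# Hypothetical drum-sound parameters with the range each one may be randomised in.
PARAM_RANGES = {"pitch_hz": (40.0, 200.0), "decay_s": (0.05, 1.0), "noise_mix": (0.0, 1.0)}

def steps(row):
    """Return the step indices on which a pattern row triggers."""
    return [i for i, c in enumerate(row) if c == "x"]

def randomise_drum(rng):
    """Draw a fresh value for every parameter, uniformly within its range."""
    return {name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}

rng = random.Random()                # pass a seed here for repeatable results
pattern = PATTERNS["house"][0]       # the user picks genre and variation
kick_steps = steps(pattern["kick"])  # steps on which the kick fires
sound = randomise_drum(rng)          # "hit random" until it sounds good
```

Keeping the ranges in one table means the random button can never produce a value outside what the module can handle.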

That said, I still want to make a synth controlled by a row of knobs that represent "feelings", like smileys going from sad to happy, angry to nice, evil to good, tired to energetic, etc. Then use machine learning to teach the machine which parameters each knob should control, and in which way. This way maybe even a severely handicapped person might be able to create music to his or her taste. Only problem is... I don't know how to do the machine-learning part yet.
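One possible starting point, offered only as a sketch: hand-label a few presets with feeling values, then let the machine interpolate between them. The Python below uses distance-weighted nearest-neighbour regression, which is one of the simplest forms of supervised learning and not necessarily the best fit here. The two feeling knobs, the two synth parameters and every number in `EXAMPLES` are made-up placeholders.

```python
import math

# Hypothetical training data, all values 0..1:
#   knobs  = (sad -> happy, tired -> energetic)
#   params = (filter cutoff, attack time)
EXAMPLES = [
    ((0.0, 0.0), (0.2, 0.9)),
    ((1.0, 0.0), (0.6, 0.7)),
    ((0.0, 1.0), (0.4, 0.1)),
    ((1.0, 1.0), (0.9, 0.05)),
]

def predict(knobs, examples=EXAMPLES, k=3):
    """Estimate synth parameters for a knob setting from the k nearest examples.

    Each neighbour is weighted by 1 / distance, so closer presets count more.
    """
    nearest = sorted((math.dist(knobs, f), p) for f, p in examples)[:k]
    if nearest[0][0] == 0.0:
        return nearest[0][1]  # exact match with a labelled preset
    weights = [1.0 / d for d, _ in nearest]
    total = sum(weights)
    n_params = len(nearest[0][1])
    return tuple(
        sum(w * p[i] for w, (_, p) in zip(weights, nearest)) / total
        for i in range(n_params)
    )

# A fairly happy, fairly energetic setting lands near the (1.0, 1.0) preset.
cutoff, attack = predict((0.8, 0.9))
```

The more labelled presets you collect (for example by letting people rate random patches on the feeling scales), the better the interpolation should get, and the same data could later feed a more capable model.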